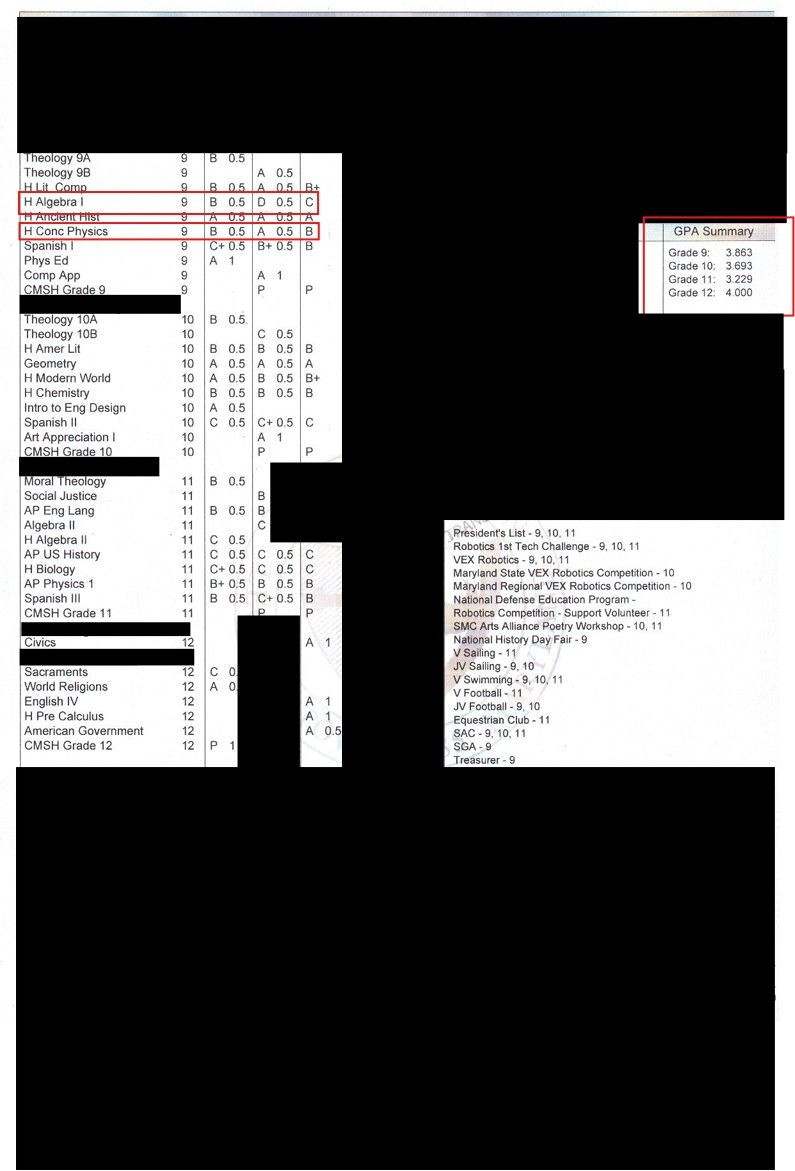

From College Laptop to AI Cluster: My Home Lab Evolution

Building a home lab is not just about hardware. It's a journey of growth, learning, and the occasional laugh at one’s nerdy excesses. As an IT engineer who wears many hats, I’ve spent years turning a mishmash of gear into a personal mini data centre. My lab’s story mirrors a path many engineers follow: start small, scale up (sometimes way up), modernise, and adapt. In this article, I’ll walk through each phase of that evolution, from an old college laptop humming under my desk to a retired 42U rack, and finally (for now) two half-racks bristling with GPUs, servers, and networking gear. Along the way, I’ll share technical insights, lessons learned (often the hard way), and a bit of humour about living with your gear.

As others have noted, building a home lab is one of the best ways to broaden your skills and even boost your career (There’s Always Money in the Homelab | by Andrew Selig | Medium). It’s a chance to create a “mini data centre” at home and experiment with technologies you might not touch at work (Trends in open source and building a home lab to create a mini data center | We Love Open Source - All Things Open).

Phase 1: Humble Beginnings – The Old College Laptop Server (2015-2017)

Adam's Original Laptop Server - 2015

Like many techies, I started my home lab journey with zero budget and one machine: my old, beat-up laptop that had seen better days. Rather than recycle it, I gave it new life as a home server. I installed Linux on it and began hosting a personal website and some simple applications. This laptop-turned-server wasn’t powerful by any stretch, but it was free, and it worked. At least, it worked most of the time, with a sticky note on top reading “never close this laptop”.

I learned a ton from this modest start. Managing a single Linux server taught me the basics of self-hosting: setting up a LAMP stack, securing SSH, scheduling cron jobs, and dealing with the inevitable crash when I tinkered a bit too much. The laptop’s ageing dual-core CPU and 4GB RAM meant I had to optimise whatever I ran. I couldn’t just throw resources at problems, so I became crafty with lightweight services. It was a crash course in system administration on a shoestring. And yes, the laptop did crash on occasion from overheating, so a strategically placed desk fan borrowed from the RA became its unofficial cooling system (much to the amusement of my roommates).
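To make “securing SSH” concrete, here is a minimal Python sketch of the kind of check that stage involves: it scans an OpenSSH `sshd_config` and flags `PermitRootLogin` and `PasswordAuthentication` when they aren't set to `no`. It is an illustration, not the script used on that laptop; the two-directive policy is an assumption drawn from common hardening advice, and the parser only handles simple whitespace-separated `Keyword value` lines.

```python
# Minimal sketch (assumed policy): flag risky settings in an OpenSSH sshd_config.
# Only simple "Keyword value" lines are parsed; Include files and the
# "Keyword=value" form are not handled.

RECOMMENDED = {
    "permitrootlogin": "no",
    "passwordauthentication": "no",
}


def audit_sshd_config(text: str) -> list[str]:
    """Return human-readable findings for the given sshd_config contents."""
    seen: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue
        keyword, value = parts[0].lower(), parts[1].strip()
        if keyword == "match":
            break  # everything after a Match line is conditional; stop here
        # sshd uses the first value it obtains for most keywords.
        seen.setdefault(keyword, value)

    findings = []
    for keyword, wanted in RECOMMENDED.items():
        actual = seen.get(keyword)
        if actual is None:
            findings.append(f"{keyword}: not set explicitly (relies on the default)")
        elif actual.lower() != wanted:
            findings.append(f"{keyword}: is '{actual}', expected '{wanted}'")
    return findings


if __name__ == "__main__":
    sample = "PermitRootLogin yes\nPasswordAuthentication no\n"
    for finding in audit_sshd_config(sample):
        print(finding)
```

On a real box, `sshd -T` prints the effective configuration (with lowercase keywords) and is a more reliable input than the raw file when `Include` or `Match` blocks are in play.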

Fun Anecdote: At one point, I propped my server laptop on a piece of scrap aluminium I milled myself, both to boost airflow and to act as a small heat sink. My roommates said the whole setup looked like something MacGyver and a broke college student would co-design. That wasn’t far from the truth. It wasn’t pretty; in fact, it looked like it belonged in a gallery of desperate engineering. But it worked. That little Franken-server chugged through the summer heat with a desk fan like it was on life support, and I was proud of it.

Looking back, this phase was all about learning fundamentals. With a single server, I had no redundancy and no fancy infrastructure. It was just raw experience and vibes. It forced me to understand exactly how things ran under the hood. I gained a healthy respect for backup configs and UPS units after a power flicker caused an abrupt shutdown and complete loss of data (along with a heart attack for me). Little did I know this was only the beginning, and things were about to scale in a big way.

Phase 2: A Slice of Pi - Early Experimentation with Raspberry Pi (2017-2020)

After the laptop proved I could host basic services, I got curious about the tiny Raspberry Pi computers I’d heard about around the engineering department and had started playing with in class. Here was a computer the size of a deck of cards, costing around $35, that could run Linux on a few watts of power. It sounded perfect for a home lab experiment. I grabbed a Raspberry Pi (a Raspberry Pi 3 Model B at the time) from the local Micro Center and dove in.

Right away, the Ras Pi taught me new lessons. Its ARM processor and 1GB of RAM meant I had to be even more resource-conscious. I set it up as a home DNS server and ad-blocker using Pi-hole, joining a legion of hobbyists who use Ras Pis to improve their home network security (There’s Always Money in the Homelab | by Andrew Selig | Medium). Suddenly, the terrace I was living in with my roommates had network-wide ad blocking and improved DNS caching, all thanks to this little board. I also tried hosting a media server on the Ras Pi, but quickly discovered that transcoding video on that Pi was… well, painfully slow. Those early Ras Pis excelled at small, always-on tasks (like a local Git server or an IoT hub for some smart lights I was tinkering with), but they weren’t cut out for anything heavy.
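Pi-hole's core trick is DNS sinkholing: the Pi answers DNS queries for the whole network and returns a dead-end answer for domains on its blocklists. The Python sketch below models that decision in a simplified way. It is not Pi-hole's implementation, the blocklist entries and upstream records are hypothetical, and matching parent domains (so `cdn.ads.example` is caught by `ads.example`) is a choice made for this sketch.

```python
# Simplified model of a DNS sinkhole (not Pi-hole's actual code).
BLOCKLIST = {"ads.example", "tracker.example.net"}  # hypothetical entries
SINKHOLE_ANSWER = "0.0.0.0"  # dead-end answer commonly used by DNS blockers


def is_blocked(domain: str, blocklist: set[str] = BLOCKLIST) -> bool:
    """True if the domain or any of its parent domains is on the blocklist."""
    labels = domain.lower().rstrip(".").split(".")
    # For "cdn.ads.example", check "cdn.ads.example", "ads.example", "example".
    return any(".".join(labels[i:]) in blocklist for i in range(len(labels)))


def resolve(domain: str, upstream: dict[str, str]) -> str:
    """Answer from the sinkhole when blocked; otherwise ask a stand-in upstream resolver."""
    if is_blocked(domain):
        return SINKHOLE_ANSWER
    return upstream.get(domain.lower().rstrip("."), "NXDOMAIN")


if __name__ == "__main__":
    upstream = {"good.example": "192.0.2.10"}  # hypothetical upstream records
    for name in ("cdn.ads.example", "good.example"):
        print(name, "->", resolve(name, upstream))
```

Because every device on the network uses the Pi as its DNS server, this one decision point is what makes the ad blocking network-wide.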

Still, there was a real thrill in this step. I had essentially built a server that fit in my palm. It was silent, sipped power, and never complained - a far cry from the old laptop’s whirring fan under my desk. This phase expanded my idea of what a home lab could be. It wasn’t just old PCs in a closet; it could include microcomputers wired together in creative ways. In fact, I started dreaming about building a cluster of Raspberry Pis one day, an idea I’d realise later.

Tech Sidebar:

Raspberry Pi 3 Model B (2016) – 1.2 GHz quad-core ARM CPU, 1 GB RAM, 100 Mbps Ethernet. Runs off 5V micro-USB power. Perfect for lightweight servers and DIY electronics projects. It’s amazing how much these little guys could do for their size and cost.

By the end of the Raspberry Pi experiment, my home lab had grown from one machine to two, and I had tasted the possibilities of scaling out with inexpensive hardware. But I still hungered for more power and flexibility. The next phase of the journey would take a decidedly enterprise turn, as I ventured into hardware that most people associate with corporate data centres.

Fun Anecdote: Around this time, I also got into cryptocurrency mining with a partner who had a lot more experience. They taught me a lot about hardware, software, and networking during our time together, including the fact that cooling all the rigs called for a full tent! Many of these lessons would carry into the next step of my journey.

Original Mining Rig Setup - 2017

Upgraded Mining Rig Setup along with Networking and Pi Server - 2017

Phase 3: Enter the Big Iron - Three Dell R720 Servers Arrive (2020-2021)

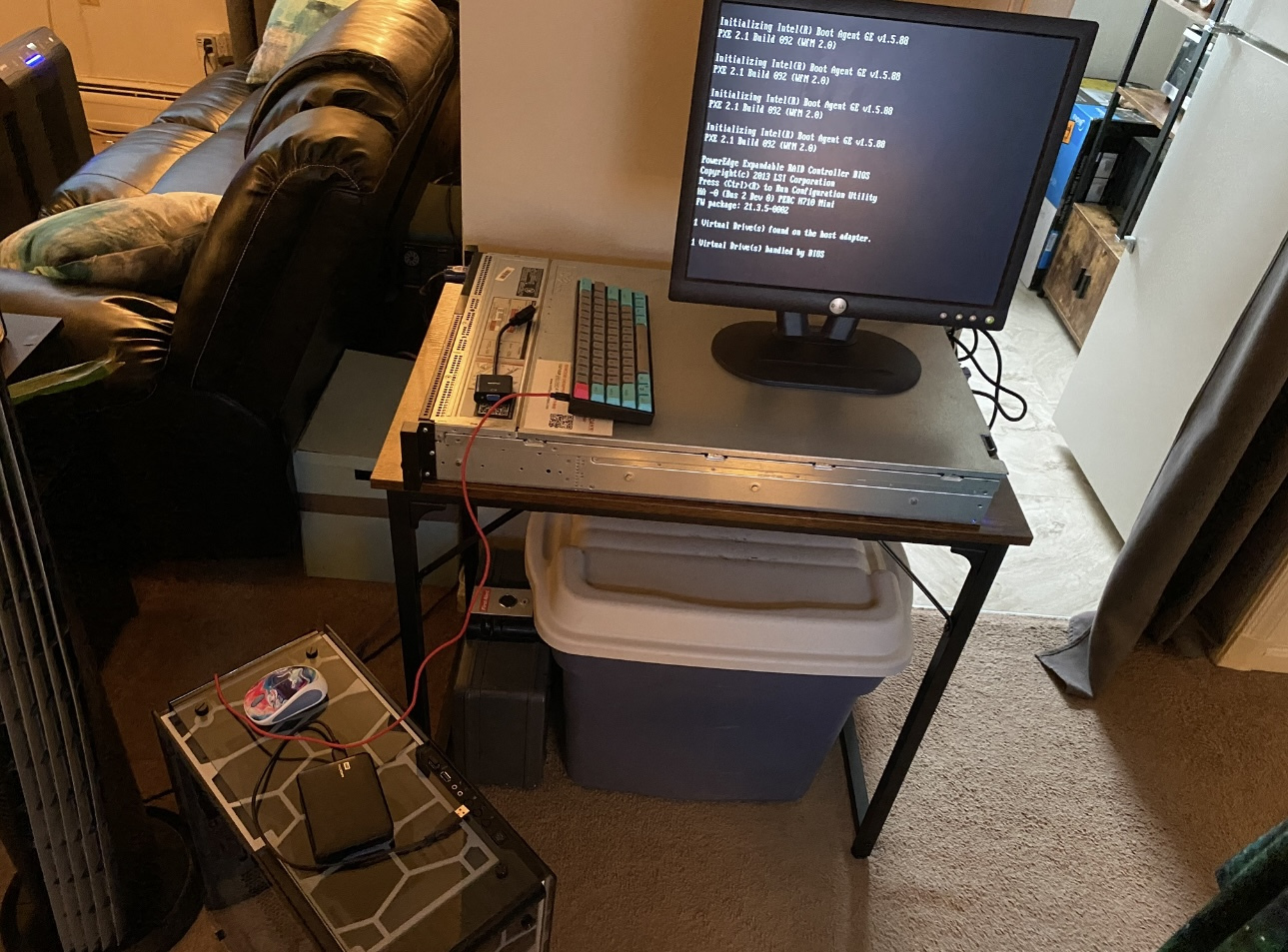

One of the Original Dell R720 Servers (Name "Alice") - 2020

I admit it: I went a bit crazy in this phase. What started as “maybe I’ll get a used rackmount server” turned into three Dell PowerEdge R720 units, a rack, a KVM, and other hardware taking up residence in my living room. How did that happen? Well, I found a local business upgrading their data centre and selling off old gear at bargain-bin prices. One thing led to another, and before I knew it, a truck packed with gear, including these three servers (dual-Xeon beasts with tons of RAM), was delivered to my door.

To put it in perspective, a single R720 can house dual Intel Xeon CPUs and up to 192 GB of RAM (Setting Up Dell R720 Server in the Home Lab · Merox). These were enterprise-grade machines, far more powerful than anything I’d ever touched outside of work. Suddenly, my humble home lab had the horsepower of a small data centre. I felt like a kid at Christmas, eagerly installing Proxmox and VMware ESXi to explore virtualisation. I set up a Proxmox cluster across the R720s so I could run multiple VMs and even experiment with live migration. At one point, I had dozens of VMs and containers spinning, everything from a Windows Server domain controller (for practice) to an Elasticsearch cluster for logs. My home lab had graduated from a single node to a full cluster of servers.

Of course, reality hit quickly as I heard my wallet crying for mercy. These R720s were loud and power-hungry. The first time I powered them all on, the combined fan noise sounded like a jet engine spooling up – my dog was not amused, nor was my roommate when the circuit breaker tripped. And my electricity bill? Let’s just say I became very interested in energy-efficient computing after seeing that spike. It’s a common trade-off home lab enthusiasts face: you can get retired enterprise servers cheaply, but they’ll get you back in operating costs (Top Home Lab Trends in 2024 - Virtualization Howto). I learned to use IPMI and custom fan curves to quiet them down a bit, and I staggered their boot times to avoid tripping any circuit breakers. These were real concerns now: my lab was no longer a toy running on a laptop charger, but something drawing serious wattage from the wall.

Lab Lesson: Enterprise servers offer immense performance for a low purchase price, but they consume a lot of power and space. I ended up installing a dedicated 20A power line for the rack and a beefy UPS to handle outages. Integrating a UPS was crucial to protect against power loss; it gave me peace of mind that the servers would shut down gracefully during an outage (Setting Up Dell R720 Server in the Home Lab · Merox).
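A dedicated 20A line is easier to reason about with numbers. The Python sketch below does the arithmetic under stated assumptions: a 120V circuit (the voltage isn't given above), the common rule of thumb of keeping continuous loads at or below 80% of the breaker rating, and hypothetical per-server and overhead draws. Measure your own gear before relying on any of these figures.

```python
# Back-of-the-envelope power budget for a dedicated rack circuit.
# Assumptions: 120 V circuit, 80% continuous-load rule of thumb, and
# hypothetical per-server and overhead draws (measure your own gear).


def continuous_budget_watts(amps: float, volts: float, derate: float = 0.8) -> float:
    """Usable continuous wattage after derating the breaker rating."""
    return amps * volts * derate


def servers_that_fit(budget_w: float, per_server_w: float, overhead_w: float = 0.0) -> int:
    """How many identical servers fit after fixed overhead (switch, KVM, UPS losses)."""
    remaining = budget_w - overhead_w
    return max(0, int(remaining // per_server_w))


if __name__ == "__main__":
    budget = continuous_budget_watts(amps=20, volts=120)  # 20 A x 120 V x 0.8 = 1920 W
    # Hypothetical: ~350 W per busy dual-Xeon server, ~150 W for network gear.
    print(f"budget {budget:.0f} W, servers: {servers_that_fit(budget, 350, 150)}")
```

With those placeholder draws, five busy servers fit under the derated budget; the same function shows quickly how little headroom remains once a sixth box or a GPU enters the picture.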

Despite the challenges, this phase levelled up my skills dramatically. I delved into enterprise tech: RAID configurations, dual power supplies, network bonding across multiple gigabit NICs, and iDRAC (Dell’s remote management controller) for managing servers headlessly. My home network also had to evolve; I added a managed switch to handle the many Ethernet connections and even configured VLANs to segregate my lab traffic from the home Wi-Fi (Homelab Learning: Challenges faced and general housekeeping tips for pfSense | by Evyn Hedgpeth | Medium). It truly started to feel like I was running a miniature data centre at home. And in a way, I was, complete with all the fun and headaches that come with being the sysadmin of your own little cloud.
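One subnet per VLAN is the usual way to carve up a lab network like this. A minimal Python sketch using the standard `ipaddress` module; the `10.20.0.0/16` supernet, the segment names, and the VLAN-ID numbering scheme are all hypothetical.

```python
import ipaddress


def plan_vlans(supernet: str, segments: list[str], new_prefix: int = 24,
               first_vlan_id: int = 10) -> dict[str, dict]:
    """Give each named segment a VLAN ID and its own subnet carved from the supernet."""
    net = ipaddress.ip_network(supernet)
    available = 2 ** (new_prefix - net.prefixlen)
    if len(segments) > available:
        raise ValueError(f"{supernet} only holds {available} /{new_prefix} subnets")
    plan = {}
    for offset, (name, subnet) in enumerate(zip(segments, net.subnets(new_prefix=new_prefix))):
        plan[name] = {
            "vlan_id": first_vlan_id + 10 * offset,  # 10, 20, 30, ... (arbitrary scheme)
            "subnet": str(subnet),
            "gateway": str(next(subnet.hosts())),  # first usable address, e.g. .1
        }
    return plan


if __name__ == "__main__":
    for name, info in plan_vlans("10.20.0.0/16", ["lab", "home", "guest_wifi"]).items():
        print(name, info)
```

Keeping the plan in code (or any versioned file) makes it easy to see which VLAN owns which range when the switch and firewall configs need to agree.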

Phase 4: Home Lab Meets the Real World – Running Small Businesses (2021-2024)

42U Rack Being Delivered to Adam's Apartment - 2021

For a while, the home lab was purely a hobby playground. But then an interesting thing happened: I found myself running a small business. First it was a gaming community, then a game developer hosting service. Later, it morphed into a consulting business, and finally it became just a game developer hosting service again. I decided to leverage the lab infrastructure to support all of this. I ordered two more R720s and stuffed everything into the 42U rack in my living room. In retrospect, this was both ambitious and slightly insane. Suddenly, my tinkering had real-world stakes: clients, users, and customers depending on services running in my living room.

One of our clients was a small indie game development studio, and we hosted their staging sites and internal tools on these servers. Rather than paying for cloud instances, I deployed much of this on our own servers to save costs, but only after discussing it with the client and offering them a good price for the service. This was feasible thanks to the robust R720 cluster; I had the compute and storage to do it. But it forced me to adopt a far more professional mindset toward my lab. No more carefree rebooting of everything on a whim; uptime started to matter, along with cybersecurity.

I implemented strict backup regimes. All critical VMs got nightly snapshots, which were shipped to a NAS and synced off-site to another partner's server. This wasn’t just for bragging rights anymore; it was essential to the business. That said, a robust backup strategy is crucial in any lab environment, as many will tell you (The Cost Of Homelab Backups). I even tested restores occasionally to make sure our business data could be recovered after a failure. The home lab now had elements of a production environment: monitoring with Grafana to alert me if CPU or memory went haywire, a UPS to ride out short power outages (and automatic shutdown scripts for longer ones), and proper network firewalls. I had moved from being a hobbyist to playing the role of Infrastructure Engineer, ISSE, and IT Manager for a small business, all within my own home, and later offering consulting services too!
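Nightly snapshots pile up fast, so some retention rule has to decide what to prune. The text doesn't say which rule was used; the Python sketch below assumes a common pattern of keeping the last N nightlies plus the newest snapshot from each of the last M ISO weeks, with hypothetical defaults of 7 and 4.

```python
from datetime import date


def snapshots_to_keep(snapshots: list[date], daily: int = 7, weekly: int = 4) -> set[date]:
    """Return the snapshot dates to retain; everything else is a pruning candidate."""
    newest_first = sorted(set(snapshots), reverse=True)
    keep = set(newest_first[:daily])  # the most recent nightlies
    weeks_seen: list[tuple[int, int]] = []
    for snap in newest_first:
        week = tuple(snap.isocalendar()[:2])  # (ISO year, ISO week number)
        if week not in weeks_seen:
            weeks_seen.append(week)
            if len(weeks_seen) > weekly:
                break  # older weeks fall outside the weekly window
            keep.add(snap)  # newest snapshot within each of the last `weekly` weeks
    return keep
```

For 30 consecutive nightlies, this keeps the latest seven plus one snapshot from each of the three earlier weeks. Applying the same rule on the NAS and on the off-site copy keeps both sides pruned the same way.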

Scaling Up Reliability: To accommodate the business needs, I introduced redundancy wherever possible. I kept services on separate VMs across different physical nodes, so one server failure wouldn’t take down everything. I also set up a secondary internet connection as a failover. Yes, I convinced my ISP to give me a second line. Yes, it was expensive. These might sound like overkill for a home lab or even a small business, but they proved invaluable when, for example, a PSU died in one server - the workloads successfully failed over to others and the business applications stayed online. Downtime went from an annoyance to potentially losing revenue on a tight margin, so I treated it seriously.
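Spreading copies of a service across physical hosts is what let the business apps ride out that PSU failure. A minimal Python sketch of the idea (anti-affinity placement), with hypothetical host and service names. In the lab this was done by placing VMs on different nodes; the sketch only models which services survive the loss of one host.

```python
# Sketch of anti-affinity placement: each service's replicas land on distinct hosts.
# Host and service names are hypothetical.
from itertools import cycle


def place(services: dict[str, int], hosts: list[str]) -> dict[str, list[str]]:
    """Map each service to `replicas` distinct hosts, rotating the starting host."""
    if not hosts:
        raise ValueError("need at least one host")
    placement = {}
    start = cycle(range(len(hosts)))
    for name, replicas in services.items():
        if replicas > len(hosts):
            raise ValueError(f"{name}: {replicas} replicas but only {len(hosts)} hosts")
        first = next(start)
        placement[name] = [hosts[(first + i) % len(hosts)] for i in range(replicas)]
    return placement


def survivors(placement: dict[str, list[str]], failed_host: str) -> dict[str, bool]:
    """For each service, True if at least one replica runs somewhere other than the failed host."""
    return {name: any(h != failed_host for h in hosts) for name, hosts in placement.items()}


if __name__ == "__main__":
    layout = place({"web": 2, "db": 2, "cron": 1}, ["r720-a", "r720-b", "r720-c"])
    print(layout)
    print(survivors(layout, "r720-c"))  # cron has no other copy
```

In the usage example, losing `r720-c` leaves `web` and `db` running on their other replicas, while the single-copy `cron` service goes down with the host.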

This phase taught me hard lessons in networking and security. To let employees access resources from outside, I configured a VPN server on my network. I hardened the perimeter with a pfSense firewall (running as a VM on one of the R720s), a popular open-source firewall often used in home labs (Home Lab Series: pfSense Setup. Setting Up and Configuring pfSense… | by josegpac | Medium). I even set up intrusion detection on it. On the network side, I wrestled with advanced configurations: separating guest Wi-Fi, IoT devices, and server VLANs so that a vulnerability in a smart light couldn’t be used to pivot into our business data. It was challenging, but by the end I had the kind of setup a small office might envy.
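The point of the VLAN split is that traffic between segments has to pass through the firewall, which can default to deny. The Python sketch below models only that decision; the subnets and the allow-list are hypothetical, and pfSense itself expresses this as per-interface rules in its own configuration rather than code like this.

```python
# Model of a default-deny inter-VLAN policy (decision logic only, not pfSense config).
# Subnets and the allow-list are hypothetical.
import ipaddress
from typing import Optional

SEGMENTS = {
    "servers": ipaddress.ip_network("10.20.0.0/24"),
    "trusted": ipaddress.ip_network("10.20.1.0/24"),
    "iot": ipaddress.ip_network("10.20.3.0/24"),
    "guest": ipaddress.ip_network("10.20.4.0/24"),
}

# Explicitly allowed (source segment, destination segment, destination port) flows.
ALLOW = {
    ("trusted", "servers", 22),   # SSH from trusted devices
    ("trusted", "servers", 443),  # HTTPS to internal tools
}


def segment_of(ip: str) -> Optional[str]:
    """Name of the segment containing ip, or None if it is not a known subnet."""
    addr = ipaddress.ip_address(ip)
    for name, net in SEGMENTS.items():
        if addr in net:
            return name
    return None


def allowed(src_ip: str, dst_ip: str, dst_port: int) -> bool:
    """Default deny: cross-segment traffic passes only if it is on the allow-list."""
    src, dst = segment_of(src_ip), segment_of(dst_ip)
    if src is None or dst is None:
        return False
    if src == dst:
        return True  # same subnet: switched locally, never routed through the firewall
    return (src, dst, dst_port) in ALLOW


if __name__ == "__main__":
    print(allowed("10.20.3.7", "10.20.0.10", 443))  # IoT -> servers: denied
```

Because nothing from the IoT segment is on the allow-list, a compromised smart bulb can still talk to its neighbours but has no routed path to the servers.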

Running real businesses on what was essentially still a home lab was stressful at times (my phone’s PagerDuty app buzzed at the least convenient moments), but it was immensely rewarding. It pushed me to refine my DevOps practices: I wrote Infrastructure-as-Code scripts to deploy services repeatably, managed configurations with Ansible, and set up small CI/CD pipelines so that deploying a new version of our website was as easy as a git push. In essence, my home lab became a DevOps sandbox for practising what I preached professionally; everything from continuous integration to automated monitoring got implemented here. Best of all, because I was mostly building in silence, there was little pressure outside my small business to put these fledgling lessons into practice before I was ready (Benefits of building in silence.. Why you should build in silence | by Jackson David | Medium). If Phase 3 made me a better sysadmin, Phase 4 made me a better engineer and businessman. It blurred the line between “lab” and “production,” preparing me for the next evolution, where I’d redraw that line by modernising my setup.

42U Rack with KVM; Taken Shortly Before Installation of Additional Servers - 2021

Phase 5: Modernising – From Loud Servers to Gaming PCs and Pi Clusters (2024)

After a few years, technology marched on, and some of my glorious R720 “big iron” began to feel dated. More importantly, the electricity bills and noise were getting hard to justify, especially as cloud alternatives got cheaper for some workloads. Around this time, a clear trend in the home lab community had emerged: replace the power-hungry behemoths with more efficient, compact systems (Top Home Lab Trends in 2024 - Virtualization Howto). I decided it was time to modernise my lab.

The first big change was consolidating some of the workload onto a custom-built gaming PC. I had a high-end rig with an Intel Core i9 and an NVIDIA RTX 2080 Ti GPU that I used for gaming and light AI experiments. I realised this single machine could outperform an entire older server in single-threaded tasks, at a fraction of the energy draw. It was a classic case of newer hardware being more efficient: one modern CPU can often do the work of two old ones while using less power. I migrated a bunch of lightweight VMs and Docker containers to this PC (running Proxmox and Docker). The result was liberating: far less noise and heat, and a noticeably lower electricity bill each month. The trend of replacing “big iron” with mini PCs or modern desktops was in full swing in my lab, echoing what many others were doing to save space and energy.
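The electricity savings from consolidation come down to simple arithmetic: average watts × hours ÷ 1000 gives kWh, which you multiply by the price per kWh. A Python sketch with placeholder numbers (two old servers at a hypothetical 200W each versus one desktop averaging a hypothetical 120W, at a hypothetical 0.15 per kWh); none of these are measurements from this lab.

```python
# Rough monthly energy-cost comparison (all inputs are hypothetical placeholders).

HOURS_PER_MONTH = 24 * 30


def monthly_cost(avg_watts: float, price_per_kwh: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost of running a device continuously for a month at a given average draw."""
    kwh = avg_watts * hours / 1000  # watts x hours -> watt-hours -> kWh
    return kwh * price_per_kwh


def monthly_savings(old_watts: float, new_watts: float, price_per_kwh: float) -> float:
    """How much cheaper the new setup is per month, at the same electricity price."""
    return monthly_cost(old_watts, price_per_kwh) - monthly_cost(new_watts, price_per_kwh)


if __name__ == "__main__":
    # Hypothetical: two old servers at ~200 W each vs one desktop averaging ~120 W.
    print(f"{monthly_savings(2 * 200, 120, 0.15):.2f} per month")
```

With those placeholders, the 280W difference works out to about 201.6 kWh, or roughly 30 per month. Real figures depend heavily on idle versus loaded draw, so a plug-in power meter beats spec-sheet numbers.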

At the same time, I finally fulfilled my earlier dream: building a Raspberry Pi cluster. I gathered a fleet of six Raspberry Pi 4 boards (much more powerful than my first Pi) and set them up in a neat stack with a dedicated 1GbE network switch. The idea was to use them as a Kubernetes cluster for learning and for running distributed services. It worked surprisingly well. Using K3s, a lightweight Kubernetes distribution, I had a tiny-but-functional Kubernetes cluster blinking away on my desk. This wasn’t just cool for bragging rights; it was genuinely useful. I scheduled home automation tasks and some CI jobs across the Pis, and they handled it gracefully. Plus, maintaining Kubernetes in a home lab forced me to learn container orchestration in depth. It’s one thing to use Kubernetes at work with a team, and quite another to be the one installing, breaking, and fixing it on your own cluster!

Moving to Kubernetes and modern hardware also meant rethinking how I managed services. Instead of dedicating whole VMs to single applications, I began “collapsing” many VMs into containers and pods, which is a growing trend for efficiency (VMs vs Containers: Key Differences & Benefits Explained). For example, I containerised my network controller, media server, and various bots that had previously run on VMs. The consolidation was dramatic: dozens of VMs turned into dozens of containers running on just a couple of hosts. This not only saved resources but also simplified updates and maintenance. With Kubernetes in place, I achieved a sort of homegrown cloud: I could deploy a new app by writing a manifest, and the cluster would figure out placement and keep it running. This was DevOps heaven for an enthusiast like me, blending coding and ops seamlessly. In fact, running Kubernetes on Raspberry Pis is something of a rite of passage; even industry pros like Jeff Geerling have done it with projects like the Pi Dramble cluster, which ran K8s on a handful of Pis (Home | Raspberry Pi Dramble).
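Writing a manifest is the whole deployment interface here. The sketch below builds a standard `apps/v1` Deployment as a Python dict and prints it as JSON, which `kubectl apply -f` accepts just as it does YAML. The app name, image, and port are hypothetical. The optional `nodeSelector` uses the well-known `kubernetes.io/arch` node label, which matters on a Pi cluster because the container image has to be available for `arm64`.

```python
# Build a standard apps/v1 Deployment manifest as a dict and emit JSON.
# The app name, image, and port are hypothetical.
import json
from typing import Optional


def deployment(name: str, image: str, replicas: int = 1,
               port: Optional[int] = None, arch: Optional[str] = None) -> dict:
    labels = {"app": name}  # the selector must match the pod template's labels
    container: dict = {"name": name, "image": image}
    if port is not None:
        container["ports"] = [{"containerPort": port}]
    pod_spec: dict = {"containers": [container]}
    if arch is not None:
        # Only schedule onto nodes of this CPU architecture (e.g. "arm64", "amd64").
        pod_spec["nodeSelector"] = {"kubernetes.io/arch": arch}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {"metadata": {"labels": dict(labels)}, "spec": pod_spec},
        },
    }


if __name__ == "__main__":
    manifest = deployment("home-bot", "registry.example/home-bot:1.0",
                          replicas=2, port=8080, arch="arm64")
    # Save the output to a file and apply it with: kubectl apply -f home-bot.json
    print(json.dumps(manifest, indent=2))
```

Generating manifests from code is a design choice for this sketch; hand-written YAML or a templating tool produces the same objects.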

During this modernisation, I decommissioned three of the five R720s. I kept two in service for specialised tasks (one became a dedicated storage server with a ton of disks in RAID, and one stayed as a VM host for things that preferred a traditional hypervisor). But for most new purposes, I’d shifted to smaller, quieter machines and clusters. My home lab now had a half-height rack instead of a full one, holding a patch panel, a new silent 10-Gigabit switch (for high-speed links between the main PC, storage server, and AI workstation), and tidy cable management – a far cry from the spaghetti mess of Phase 3.

Adam's Home Office with 42U Rack - 2024

Home Lab Tip: Embracing DevOps practices made managing this diverse environment feasible. I treated my home lab like a production environment defined in code. I wrote Terraform configs to provision VMs and Kubernetes YAML to deploy apps. CI/CD pipelines built and pushed container images for my custom applications, and Argo CD (a GitOps tool) kept the apps on my Kubernetes cluster in sync with my Git repos. This level of automation might sound like overkill, but it saved time and ensured consistency. Plus, it was fantastic practice for the cloud deployments I was increasingly exposed to in my day job (A Practical Guide to Running NVIDIA GPUs on Kubernetes | jimangel.io).
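At its core, GitOps is a reconcile loop: compare what Git says should exist with what the cluster actually runs, then act on the difference. The Python toy below models only that comparison. It is not Argo CD's code or API, and resources are simplified to dicts keyed by hypothetical `(kind, name)` pairs.

```python
# Toy model of a GitOps reconcile step (not Argo CD's code or API).
# Resources are keyed by (kind, name) and their specs compared as plain dicts.

Key = tuple[str, str]


def plan_sync(desired: dict[Key, dict], live: dict[Key, dict],
              prune: bool = False) -> dict[str, list[Key]]:
    """List what to create, update, and (only if prune=True) delete to match Git."""
    create = sorted(k for k in desired if k not in live)
    update = sorted(k for k in desired if k in live and desired[k] != live[k])
    delete = sorted(k for k in live if k not in desired) if prune else []
    return {"create": create, "update": update, "delete": delete}


if __name__ == "__main__":
    in_git = {("Deployment", "web"): {"replicas": 2}, ("Service", "web"): {"port": 80}}
    in_cluster = {("Deployment", "web"): {"replicas": 1}, ("ConfigMap", "old"): {}}
    print(plan_sync(in_git, in_cluster))
```

A controller like Argo CD repeats this comparison continuously. By default, its automated sync does not delete resources that have disappeared from Git unless pruning is enabled, which is why `prune` defaults to `False` here.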

By the end of this phase, my home lab had transformed. It was now a blend of old and new: a couple of trusty enterprise servers, a powerful custom PC doing double duty as a server, a cluster of Raspberry Pis, and an entirely new layer of software orchestration tying it all together. The lab was leaner, quieter, and smarter. And it was just in time for the next big shift that was sweeping the tech world: the rise of Generative AI, which would heavily influence my lab’s current incarnation.

Phase 6: The Present – Half-Racks, AI Powerhouses, and GenAI Dreams (2024-Present)

Fast forward to today. My home lab now spans two half-rack cabinets, one in my home office and one in my living room, and it’s the most advanced setup I’ve ever had. It’s a culmination of all the previous phases, with a clear eye toward the future. Let me paint a picture of the current lab:

- Networking & Infrastructure: Two half-rack cabinets house my gear neatly. One contains the core networking stack: a rack-mounted router (running OPNsense, a successor to my earlier pfSense setup), a 24-port 10 GbE switch for high-speed connectivity, and a 48V UPS unit that keeps everything online during power blips. The network is fully segmented - VLANs separate my lab, home devices, and guest networks for security. I’ve basically built an enterprise-grade network at home, complete with aggregated links and a Wi-Fi 6 access point.

- Raspberry Pi Cluster: My Raspberry Pi cluster still lives on, now running newer Pi 4 boards that are gradually being swapped for the latest Raspberry Pi 5, with NVMe SSD HATs on a couple of them for fast storage. They sit on a shelf in the second rack cabinet, blinking away. They handle various services: a distributed Node-RED setup for home automation that I set up in the past few weeks, a small pool of CI/CD runners for testing my personal projects, and some Kafka + Spark experimentation. Yes, I even tried a tiny data pipeline on them! The Kubernetes cluster spans a couple of these Pis and an Intel NUC, making it a mixed-architecture cluster. It’s amazing that I can have an “ARM + x86” heterogeneous Kubernetes cluster, something that would have been exotic only a few years ago. The cluster is primarily for learning and light workloads, but it’s incredibly cool to have. And thanks to Kubernetes, it’s not a pain to manage: the control plane doesn’t care that one node is a $35 Pi and another is a NUC, and it treats resources and scheduling abstractly, as long as each container image is built for the architecture of the node it lands on.

- Legacy Dell Servers: I kept two Dell R720 servers as part of the lab, but they’re largely in a supporting role now. One acts as a NAS/SAN, packed with drives and exporting NFS and iSCSI volumes to the rest of the lab. The other is powered off most of the time, serving as a cold spare or for one-off experiments that need lots of cores or RAM (on-demand lab expansion, if you will). I must confess, hearing the familiar whir of an R720 spinning up for a task still gives me a nostalgic thrill. They remind me of how far the lab has come: from running everything on them to using them only when needed.

- GenAI Machines: The stars of the show in the current setup are my two GenAI powerhouse machines. As an engineer, I’ve lately been drawn to running and fine-tuning generative AI models locally. The first machine is a custom-built workstation with an NVIDIA RTX 2080 Ti GPU (11GB VRAM), my earlier gaming PC, now thoroughly repurposed for AI and development work. The second is an absolute beast: a server housing an NVIDIA A100 80GB GPU. Yes, you read that right: I somehow got my hands on a used A100. It was damaged, but I managed to repair it, and it’s now crunching numbers in my home office. For context, the A100 is a data-centre-grade GPU, a massive 300W card with 80 GB of high-bandwidth memory, truly a behemoth of a GPU based on NVIDIA’s Ampere architecture (Stable Diffusion Vs. The most powerful GPU. NVIDIA A100. | by Felipe Lujan | Medium). This card can do things my poor 2080 Ti could only dream of, like comfortably fitting large language models that require tens of gigabytes of VRAM.
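A rough rule for whether a model's weights fit on a card is parameters × bytes per parameter. The Python sketch below applies that rule with an assumed 20% padding. It ignores KV cache, activations, and optimizer state (which make training far hungrier), and the model sizes in the example are illustrative rather than models run in this lab.

```python
# Weights-only VRAM estimate: parameters x bytes per parameter, padded by an
# assumed 20% for runtime overhead. KV cache, activations, and optimizer state
# are NOT included.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(params_billion: float, dtype: str, overhead: float = 1.2) -> float:
    """Approximate GB needed for the weights (1e9 params x N bytes = N GB)."""
    return params_billion * BYTES_PER_PARAM[dtype] * overhead


def fits(params_billion: float, dtype: str, vram_gb: float) -> bool:
    """True if the padded weights estimate fits in the given VRAM."""
    return weights_gb(params_billion, dtype) <= vram_gb


if __name__ == "__main__":
    for params, dtype in [(7, "fp16"), (7, "int4"), (70, "fp16"), (70, "int4")]:
        need = weights_gb(params, dtype)
        print(f"{params}B {dtype}: ~{need:.1f} GB | 2080 Ti (11 GB): {fits(params, dtype, 11)}"
              f" | A100 (80 GB): {fits(params, dtype, 80)}")
```

By this estimate, a 7-billion-parameter model in fp16 needs about 16.8 GB: too much for the 2080 Ti's 11 GB, but easy for the A100's 80 GB. 4-bit quantisation shrinks it to about 4.2 GB, while a 70B model in fp16 (about 168 GB) fits on neither card.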

These two machines handle all my heavy AI workloads. I use them to run experiments with large language models (LLMs) and to train smaller models for projects. One evening I might be fine-tuning a Transformer on the A100 (which hardly breaks a sweat with its server-grade cooling and gigantic memory), and the next I might be generating fun images with Stable Diffusion on the 2080 Ti. The difference in capability is night and day: the A100 ploughs through tasks 5-10x faster, letting me do in-house what I used to need cloud GPUs for. Generative AI lit a fire under my home lab evolution; ever since the AI explosion, having local compute for AI has felt increasingly important. The world hasn’t been the same since generative AI hit the scene (Self-hosting LLMs at Home: The Why, How, and What It’ll Cost You | by Kacper Grabowski | Kingfisher-Technology | Apr, 2025 | Medium), and that’s reflected in my lab’s design. By hosting AI models locally, I can experiment freely with large models while keeping my data private and local (How to Protect Your Data with Self-Hosted LLMs and OpenFaaS Edge | OpenFaaS - Serverless Functions Made Simple), which is both convenient and aligned with data security needs.

Managing these disparate pieces as one cohesive environment is a challenge, but DevOps practices come to the rescue. I use Kubernetes and containerisation to tie the lab together where it makes sense. For instance, the two AI machines are also part of my Kubernetes cluster as special nodes with GPU scheduling enabled. This means I can submit a job (like a distributed training run or a Jupyter notebook server for a project) and let Kubernetes allocate it to whichever GPU node is appropriate. It’s the same idea used in cloud AI platforms: abstract the hardware and use an orchestrator to manage workload placement (What is AI Orchestration? | IBM). When I run a Jupyter notebook for data science work, such as building a heat map of vulnerabilities and their related POA&Ms for an organisation, it doesn’t matter whether it lands on the 2080 Ti box or the A100 box; what matters is that I get the results and can reproduce the environment on either. In practice, I often target the A100 for big jobs (because who wouldn’t?) and leave the 2080 Ti for smaller debugging sessions or as a backup GPU to run other tasks in parallel.
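In Kubernetes, GPU scheduling usually works through an extended resource. With NVIDIA's device plugin (or GPU Operator) installed, nodes advertise `nvidia.com/gpu`, and a pod that sets a limit on it only lands on a node with a free GPU. The Python sketch below builds such a Pod spec as a dict. The image is hypothetical, and `lab.example/gpu-class` is an assumed custom node label you would apply yourself (for example with `kubectl label node <node> lab.example/gpu-class=a100`).

```python
# Build a Pod spec that requests GPUs through the nvidia.com/gpu extended resource.
# Assumes the NVIDIA device plugin (or GPU Operator) is installed; the image and
# the "lab.example/gpu-class" node label are hypothetical.
import json
from typing import Optional


def gpu_pod(name: str, image: str, gpus: int = 1, gpu_class: Optional[str] = None) -> dict:
    pod_spec: dict = {
        "restartPolicy": "Never",  # run-to-completion work such as a training run
        "containers": [{
            "name": name,
            "image": image,
            # GPUs are requested via limits; Kubernetes uses the limit as the request.
            "resources": {"limits": {"nvidia.com/gpu": gpus}},
        }],
    }
    if gpu_class is not None:
        # Pin to a specific card; leave unset to accept any node with a free GPU.
        pod_spec["nodeSelector"] = {"lab.example/gpu-class": gpu_class}
    return {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": name}, "spec": pod_spec}


if __name__ == "__main__":
    print(json.dumps(gpu_pod("finetune", "registry.example/trainer:latest", gpu_class="a100"), indent=2))
```

Leaving `gpu_class` unset gives the “whichever GPU node is free” behaviour described above, while setting it pins big jobs to the A100.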

Tooling-wise, I’ve adopted many AI frameworks and DevOps tools to streamline experimentation. Docker containers with GPU support are a staple; I use the NVIDIA Container Toolkit so that containers can access the GPUs. I run an instance of MLflow to track my machine learning experiments and keep a local Hugging Face model repository mirror so I’m not re-downloading large models repeatedly, since that results in letters from the ISP (sorry again about that). These are all containerised and orchestrated in the lab. In fact, the lines between development and operations blur completely here. My home lab is where I test the latest MLOps tools on real hardware, benefiting my work projects and satisfying my own burning curiosity.
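The point of a local model mirror is that each large file crosses the ISP link only once. The Python sketch below shows that read-through caching pattern in isolation. `fetch` stands in for whatever actually downloads a file (an HTTP client, or a Hugging Face client pointed at the mirror), and the cache directory layout is hypothetical.

```python
# Read-through cache for large model files: download on a miss, reuse on a hit.
# `fetch` stands in for the real downloader; the directory layout is hypothetical.
# Whole-file bytes keep the sketch short; a real mirror would stream to disk.
from pathlib import Path
from typing import Callable


def cached_fetch(repo: str, filename: str, cache_dir: Path,
                 fetch: Callable[[str, str], bytes]) -> Path:
    """Return a local path for repo/filename, calling fetch only on a cache miss."""
    target = cache_dir / repo.replace("/", "--") / filename
    if not target.exists():
        target.parent.mkdir(parents=True, exist_ok=True)
        tmp = target.with_name(target.name + ".part")
        tmp.write_bytes(fetch(repo, filename))
        tmp.replace(target)  # rename only after a full write, so partial files never look cached
    return target
```

Every later request for the same file is served from disk. Writing to a `.part` file and renaming it afterwards means an interrupted download is retried next time instead of being mistaken for a complete one.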

Despite all this high-tech wizardry, the lab retains a personal, fun touch. I have an “Ops dashboard” on a tablet on my desk, showing a Grafana panel with stats: temperatures, power draw, FPS of any ongoing AI training, network throughput, etc. I often joke that I have my own mini-NOC (Network Operations Centre) at home. It impresses the uninitiated and gives my engineering friends a good chuckle; we all know it’s probably overkill, but it’s our kind of overkill.

Austin Evans GIF

Reflecting on this journey, I see a microcosm of broader trends: starting from simple beginnings, scaling up with whatever hardware one can find, then optimising and scaling smart instead of just big. Home lab builders everywhere have been shifting from old-school servers to efficient setups and integrating the latest tech like Kubernetes and AI into their labs. We’re living in an era where even a solo engineer can mimic a lot of what large IT environments do, on a smaller scale. My home lab’s evolution - from a creaky laptop to an AI testbed - is proof of that.

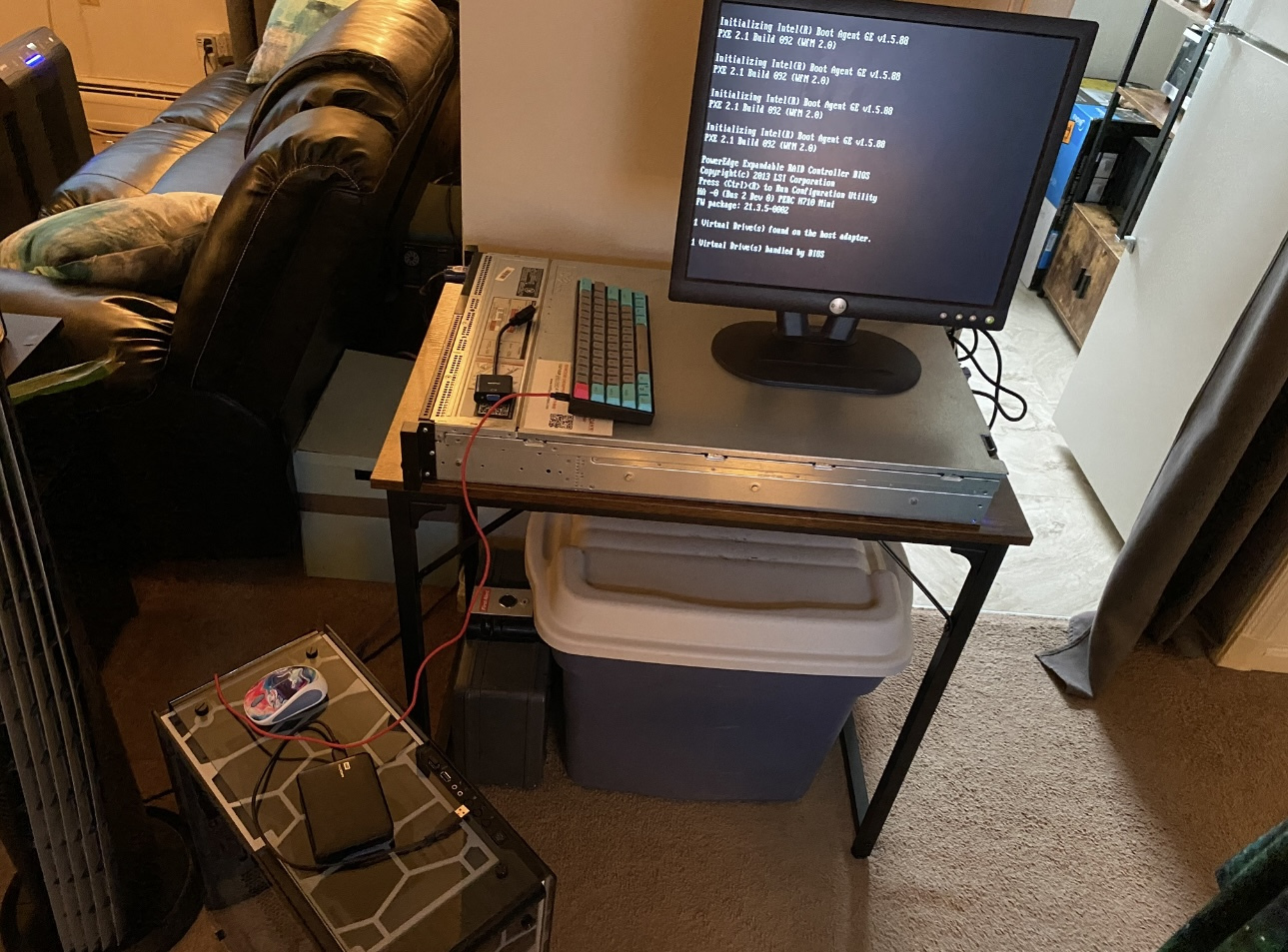

Back to Basics (Sort Of): Half My Current Lab - 2025

Conclusion: Reflections on the Home Lab Adventure

Maintaining a home lab has been one of the most rewarding aspects of my career and hobby life. It started as a way to learn and have fun, and it became a personal infrastructure that supported my business and cutting-edge projects. There’s a unique satisfaction in building something yourself: a deeper understanding that comes from racking servers at 1 AM, from troubleshooting why container orchestration broke this time, or from seeing an AI model come to life on hardware in your very own rack.

There were plenty of times at work when I fumbled through an answer on these things, not because I didn’t know it, but because I knew too much and tried to explain all ten edge cases at once. Cue the senior engineers smirking while I verbally tripped over my own brain. On the bright side, it taught me that effective communication sometimes means picking one landmine to step on at a time instead of all of them at once.

Throughout this journey, a few themes stand out:

- Continuous Learning: The lab kept me always learning - from basic Linux admin on a laptop to advanced Kubernetes and AI workflows on multi-GPU systems. In tech, if you’re not learning, you’re falling behind, and a home lab is a fantastic playground for constant skill growth (Homelab: Why You Need It and Where To Start | HackerNoon).

- Scaling (Up and Down): I learned not just how to scale up (add more servers), but also when to scale down or consolidate gear based on the needs at the time. The move to containers and modern hardware taught me that more isn’t always better - smarter is better. Efficiency, automation, and smart design beat brute force, and the broader community shift to containerization and energy-efficient setups reinforces that (Scaling IT Infrastructure for Growth).

- DevOps and Automation are Key: Without treating my home lab “as code,” I would have drowned in complexity. Embracing DevOps practices - versioning my configs, automating deployments, and monitoring everything - was a saviour. It’s no surprise that DevOps skills are in demand; they allowed me to run a one-person data centre fairly smoothly (Day55- Building a Home Lab for DevOps Practices | by Sourabhh Kalal | Medium). The payoff is confidence and competence in both software and hardware realms, something I have embraced recently.

- Expect the Unexpected: Things will go wrong - disks fail, power goes out, YAML gets misconfigured. My debugging skills and calm under pressure improved vastly thanks to home lab mishaps. There’s a kind of resilience you build when you’ve faced down a network outage caused by your own mistake at 3 AM and got things working again before anyone noticed. These stories become fun anecdotes (in hindsight) and valuable experience for real-world scenarios.

- Community and Sharing: The home lab community is incredibly generous. I solved countless problems by reading forums, Reddit threads, blog posts, and chatting on Discord with others who trod this path. That’s partly why I wanted to write this article - to share my story and maybe help or inspire someone out there. Whether it’s a tip about taming loud server fans or an idea to run an LLM locally, we all learn from each other. In the end, it’s not just a solo journey but a shared adventure in geekery.

Looking at the rack now, humming quietly (thankfully) in the corner next to me, I can’t help but smile. This setup has come a long way from the scrappy laptop server in my dorm. Who knows what the future holds? Perhaps more cloud integration, experimenting with edge computing, or maybe a quantum computing card will find its way into my rack someday (a man can dream). Whatever comes next, I know the home lab will continue to be my hands-on playground for all things new and interesting in tech.

If you’re thinking of starting or expanding your own home lab, my advice is simple: go for it, start small, and let it grow with your curiosity. Don’t let setups that others have spent YEARS building intimidate you; we all started where you are. Keep a sense of humour (you’ll need it when nothing works and you feel like crying), and embrace the journey. Before you know it, you might have your own epic story of servers and code to tell. Happy home labbing, and if you want advice, you know where to find me!